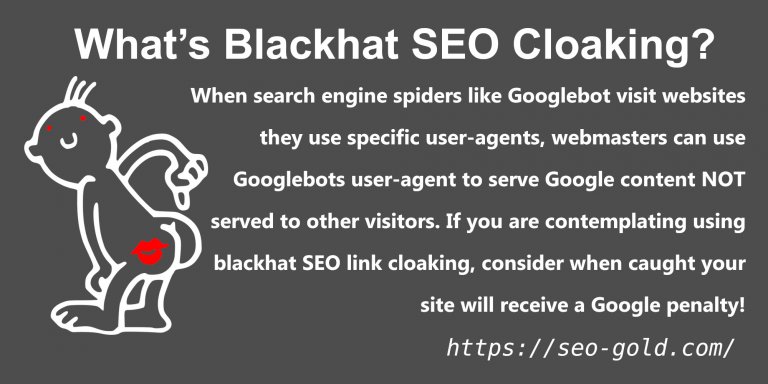

When search engine spiders like Googlebot visit a website, they identify themselves with a specific user-agent. Webmasters can detect Googlebot's user-agent and serve Google content that is NOT served to other visitors. If you are contemplating black hat SEO link cloaking, bear in mind that when you are caught, your site will receive a Google penalty!

For example, you might have links in an image-map. Image-map links cannot be indexed by Google, so it would be acceptable to serve Googlebot those links in another format, such as text links. General visitors see the links in an image-map, while Googlebot sees them as text links or standard image links (a clickable image link).
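The image-map case above can be sketched roughly as follows. This is a minimal illustration, not how any particular site does it: the `NavLink` type, the `renderNav` and `isGooglebot` helpers, and the `nav.png` file name are all hypothetical, and it assumes the crawler is recognised purely by the "Googlebot" token in its user-agent string.

```typescript
// Hypothetical link record; these names are illustrative, not from a real library.
interface NavLink {
  href: string;
  label: string;
  coords: string; // image-map rectangle as "x1,y1,x2,y2"
}

// Assumption: the crawler is identified by the "Googlebot" token in the User-Agent header.
// User-agent strings can be spoofed, so this check alone does not verify the visitor.
function isGooglebot(userAgent: string): boolean {
  return /googlebot/i.test(userAgent);
}

// Escape text before placing it inside HTML attributes or element content.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Same links for everyone; only the format differs.
function renderNav(userAgent: string, links: NavLink[]): string {
  if (isGooglebot(userAgent)) {
    // Googlebot gets plain text links.
    const items = links
      .map((l) => `<li><a href="${escapeHtml(l.href)}">${escapeHtml(l.label)}</a></li>`)
      .join("");
    return `<ul>${items}</ul>`;
  }
  // General visitors get the image-map.
  const areas = links
    .map(
      (l) =>
        `<area shape="rect" coords="${escapeHtml(l.coords)}" href="${escapeHtml(l.href)}" alt="${escapeHtml(l.label)}">`
    )
    .join("");
  return `<img src="nav.png" usemap="#nav" alt="Site navigation"><map name="nav">${areas}</map>`;
}
```

The key point is that both branches are built from the same `links` array, so Googlebot and general visitors get the same destinations and only the markup changes.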

As long as general visitors and Google see pretty much the same content AND you aren’t gaming Google, it’s acceptable to use cloaking.

I use another form of cloaking for the “Subscribe” to comment link (look above the “Submit Comment” button below). This link goes to webpages I don’t want indexed and Google wouldn’t want indexed: the URLs change for each Post and visitor, so there are potentially a LOT of these webpages and they’d be close to duplicates. The webpages have a noindex robots meta tag (so Google shouldn’t index them), and the links are cloaked using JavaScript so Google doesn’t spider them: Google ‘sees’ the “Subscribe” link as body text. Update 2020: this type of JavaScript link cloaking no longer works. Google can ‘see’ the DOM (roughly what we see in a browser), so Google can ‘see’ my hidden JavaScript links.
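A rough sketch of that kind of setup is shown below. It is not the code this site uses: the `cloakedLinkMarkup` helper, the `js-link` class name and the `data-href` attribute are hypothetical names chosen for illustration. The page source contains only body text, and a small script swaps in a real link in the browser. As the 2020 update notes, Google now renders the DOM, so it will see the resulting link.

```typescript
// Robots meta tag for the subscription pages themselves: asks crawlers not to index them.
const NOINDEX_META = '<meta name="robots" content="noindex">';

// Escape text before placing it inside HTML attributes or element content.
function escapeAttr(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical helper: emits plain text with a data attribute instead of an <a> element,
// so the raw HTML contains no anchor tag for the "Subscribe" link.
function cloakedLinkMarkup(label: string, url: string): string {
  return `<span class="js-link" data-href="${escapeAttr(url)}">${escapeAttr(label)}</span>`;
}

// Inline script that replaces each placeholder span with a clickable link in the browser.
const ACTIVATE_SCRIPT = `<script>
document.querySelectorAll("span.js-link").forEach(function (span) {
  var a = document.createElement("a");
  a.href = span.getAttribute("data-href");
  a.textContent = span.textContent;
  span.replaceWith(a);
});
</script>`;
```

A crawler that reads only the raw HTML finds a `<span>` with text in it. A crawler that executes the script, as Google now does, ends up with an ordinary `<a>` element, which is why the noindex tag on the target pages matters more than the cloaking.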
